Search patterns are changing quickly. Between October 2023 and January 2024, ChatGPT’s traffic surpassed Bing’s, marking a significant milestone in how people find information online.

Market projections suggest that LLMs will capture 15% of the search market by 2028, fundamentally changing how brands need to approach their content strategy.

Why Are LLMs Taking Over Search in 2025?

While Google maintains approximately 90% of the search market share, new data shows an accelerating transition toward LLM-based search. This shift is driven by two key technological advances:

First, the introduction of Retrieval Augmented Generation (RAG) has changed how AI interfaces handle queries.

Unlike earlier models limited by training data, RAG-enabled platforms like ChatGPT with Search and Perplexity can access real-time web information, improving response accuracy and freshness.

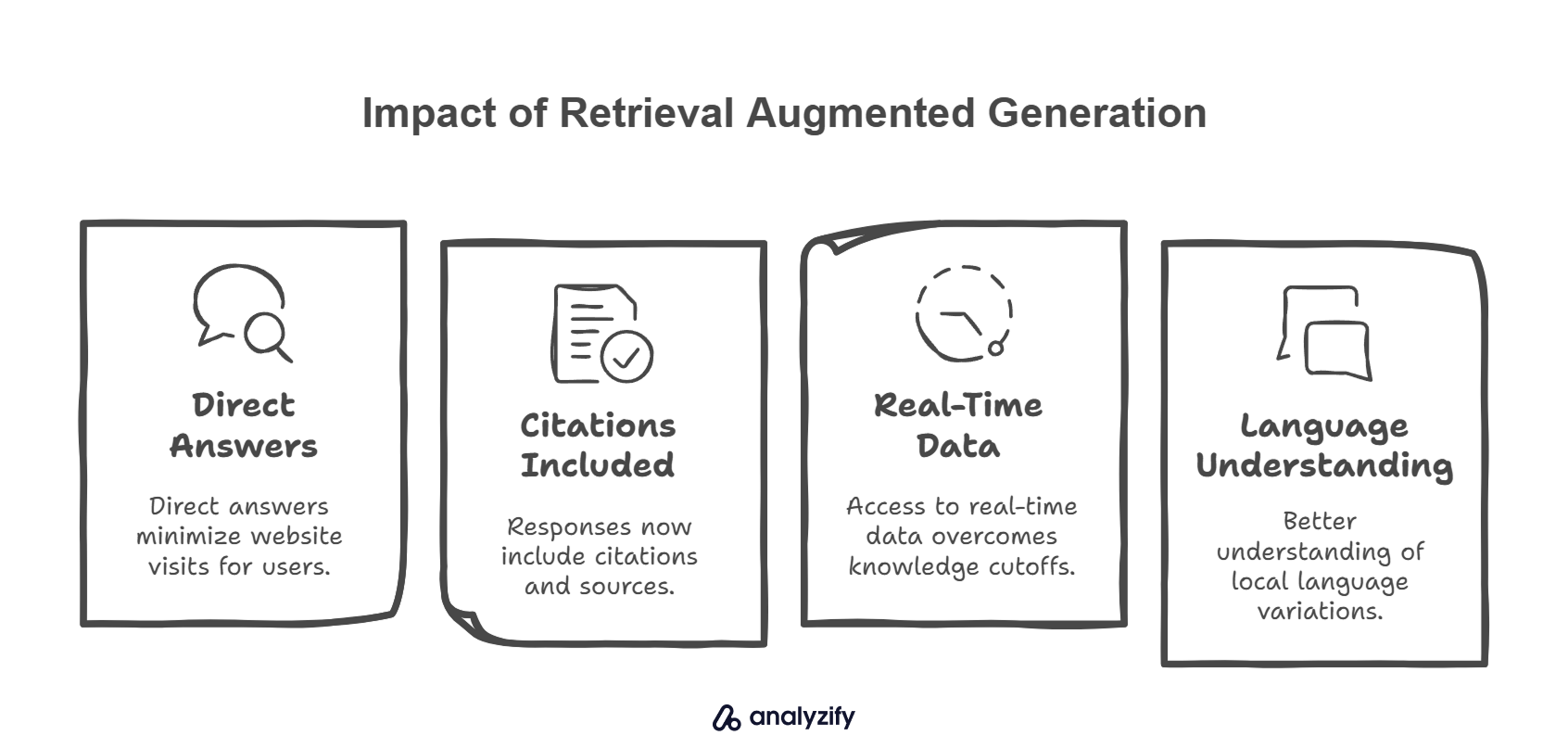

The impact of RAG extends beyond just accuracy:

- Direct answers reduce the need for users to visit multiple websites

- Citations and sources are now included in responses

- Real-time data helps overcome knowledge cutoff limitations

- Local language variations and context are better understood

Second, Google’s AI Overviews, launched in May 2024, represent perhaps the most significant shift.

Early studies from Flow examining 5,000 HR and workforce management keywords revealed substantial traffic changes, particularly for top-of-funnel queries.

This integration of AI directly into search results means brands must optimize not just for traditional search rankings, but for AI-generated summaries.

The financial implications are compelling. The global LLM market is projected to grow by 36% from 2024 to 2030, with chatbot adoption expected to reach 23% by 2030.

Gartner’s prediction that 50% of search engine traffic will shift by 2028 underscores the urgency for brands to adapt their content strategies.

Current platform usage patterns show interesting trends:

- OpenAI’s ChatGPT processed over 1 billion user messages per day in 2024

- Google AI Overviews are gaining rapid traction in traditional search

- Perplexity is establishing itself in academic and research queries

- Gemini is showing strength in technical and coding-related searches

This fragmentation of search habits means brands need a multi-platform approach, considering both foundational model training and RAG optimization strategies.

The key is understanding that while traditional SEO remains crucial, LLM optimization requires additional considerations for how content is structured, sourced, and verified.

How Do LLMs Actually Read and Process Your Content?

At their core, LLMs interpret content differently from traditional search engines. Instead of relying on keyword matching and backlink metrics, these models use a system of pattern recognition and semantic understanding.

The Building Blocks of LLM Understanding

LLMs break down content into tokens—small pieces that can represent words, parts of words, or even punctuation marks. These tokens are then mapped into a semantic space, creating a complex web of relationships between concepts.

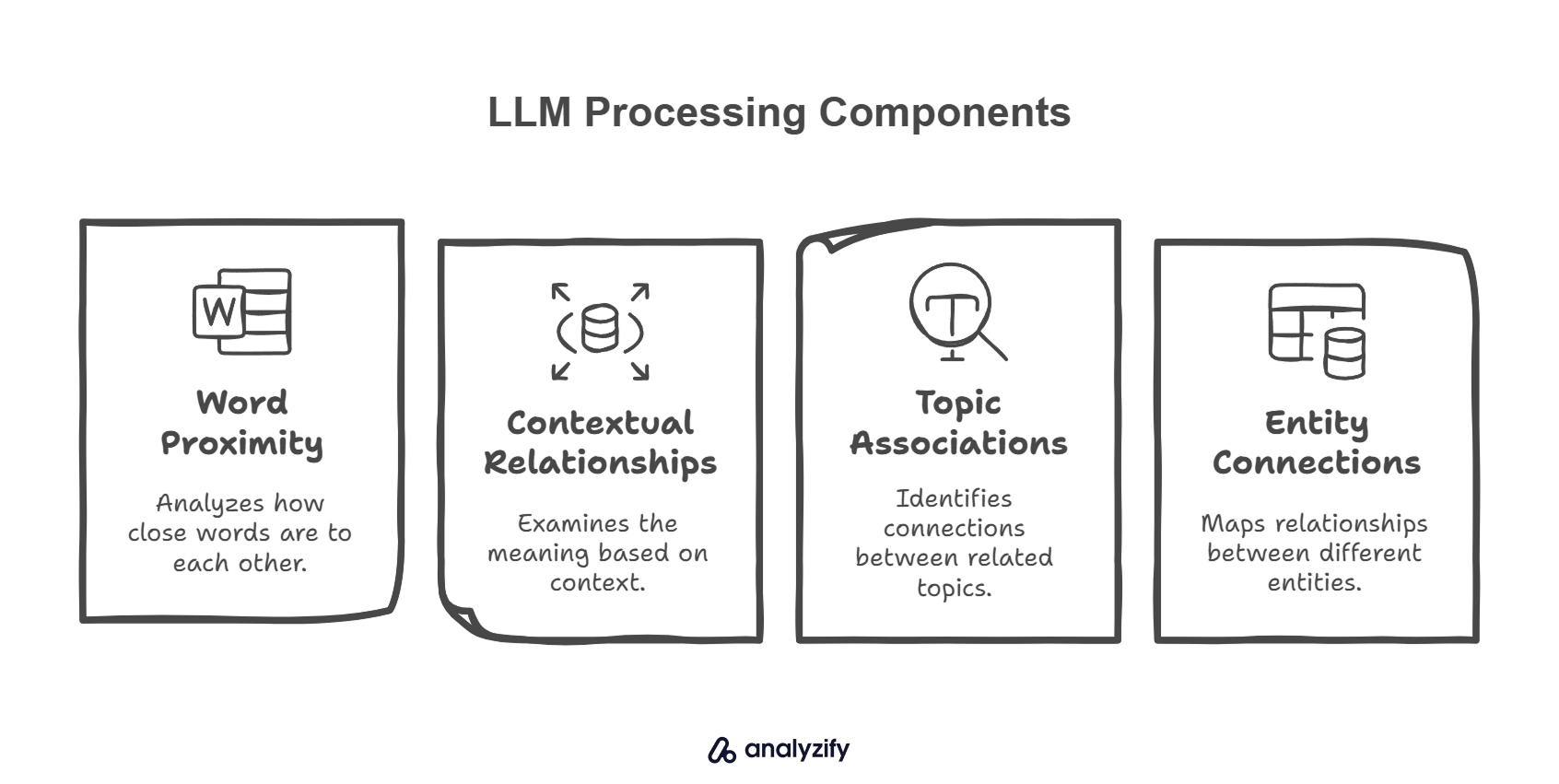
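To make the idea of tokens and a semantic space more concrete, here is a minimal sketch. It is a toy illustration, not how any production model works: `toy_tokenize` is a simple word and punctuation splitter standing in for real subword tokenization, and the three-dimensional vectors in `toy_vectors` are made-up values chosen only to show that related concepts sit closer together than unrelated ones.

```python
import math
import re


def toy_tokenize(text: str) -> list[str]:
    """Split text into lowercase word and punctuation tokens.

    A stand-in for real subword tokenization, which also splits
    rare words into smaller pieces.
    """
    return re.findall(r"\w+|[^\w\s]", text.lower())


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical 3-dimensional "embeddings". Real models learn vectors
# with hundreds or thousands of dimensions.
toy_vectors = {
    "treadmill": [0.9, 0.1, 0.0],
    "cardio": [0.8, 0.3, 0.1],
    "invoice": [0.0, 0.2, 0.9],
}

tokens = toy_tokenize("Compact treadmills, built for cardio.")
print(tokens)

related = cosine_similarity(toy_vectors["treadmill"], toy_vectors["cardio"])
unrelated = cosine_similarity(toy_vectors["treadmill"], toy_vectors["invoice"])
print(f"treadmill vs cardio:  {related:.2f}")
print(f"treadmill vs invoice: {unrelated:.2f}")
```

In this toy space, "treadmill" scores much closer to "cardio" than to "invoice", which is the kind of relationship the semantic space is meant to capture.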

For example, when an LLM processes content about a brand, it looks for:

- Word proximity patterns

- Contextual relationships

- Topic associations

- Entity connections

The foundational models using these systems operate within specific timeframes of knowledge, similar to having a snapshot of the internet up to a certain date.

This is why newer systems incorporate RAG (Retrieval-Augmented Generation) to access current information, bridging the gap between their training data and real-time updates.

Topic Clusters and Entity Recognition

Unlike traditional keyword optimization, LLMs organize information in topic clusters. Picture these clusters as interconnected webs where related concepts naturally group together.

Take a fitness equipment manufacturer — when it appears in discussions about home exercise equipment, the LLM builds associations between the brand and concepts like:

- Home fitness innovation

- Interactive training technology

- Cardiovascular health

- Space-efficient exercise solutions

These associations strengthen through repeated mentions across authoritative sources, making the brand more likely to appear in relevant AI-generated responses.
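As a rough way to audit these associations in your own coverage, the sketch below counts how many documents mention a brand together with each target concept. This is only a proxy: LLMs do not literally count co-occurrences like this, and the brand name `AcmeFit`, the concept list, and the sample documents are all hypothetical.

```python
from collections import Counter


def concept_cooccurrence(documents, brand, concepts):
    """Count documents that mention the brand together with each concept.

    Uses simple case-insensitive substring matching, which is crude
    but enough for a first-pass audit.
    """
    counts = Counter()
    for doc in documents:
        text = doc.lower()
        if brand.lower() not in text:
            continue  # only documents that mention the brand count
        for concept in concepts:
            if concept.lower() in text:
                counts[concept] += 1
    return counts


# Hypothetical third-party coverage snippets.
documents = [
    "AcmeFit's folding bike is a space-efficient pick for home fitness.",
    "For cardiovascular health, reviewers liked the AcmeFit rower.",
    "Interactive training apps are growing fast this year.",
]
concepts = ["home fitness", "interactive training", "cardiovascular health"]

for concept, count in concept_cooccurrence(documents, "AcmeFit", concepts).items():
    print(f"{concept}: {count}")
```

Concepts that never appear alongside the brand (here, "interactive training") point to associations that still need to be built.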

Content Assessment Factors

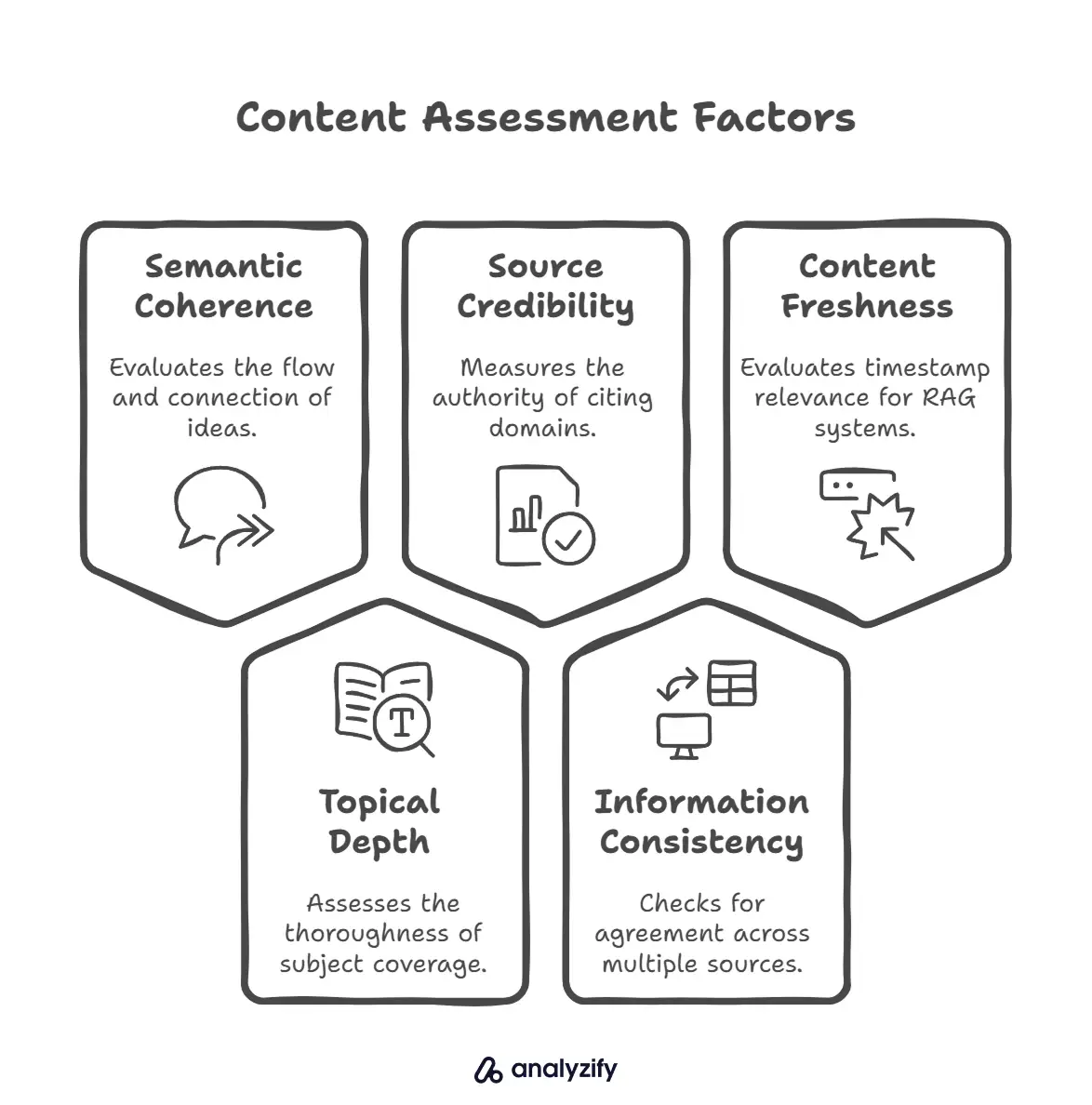

LLMs evaluate content through several key metrics:

- Semantic coherence: How well ideas flow and connect

- Topical depth: The thoroughness of subject coverage

- Source credibility: The authority of citing domains

- Information consistency: Agreement across multiple sources

- Content freshness: Timestamp relevance for RAG systems

For websites, this means the old approach of targeting specific keywords needs to evolve.

Instead, content should demonstrate thorough topic mastery and clear entity relationships that LLMs can confidently reference.

Which Content Types Get Cited Most by LLMs?

Recent studies of over 10,000 real-world search queries reveal clear patterns in the types of content that LLMs consistently cite and reference in their responses.

Statistical Content and Research Findings

Content featuring original statistics and research findings sees 30-40% higher visibility in LLM responses. This preference stems from LLMs’ built-in verification processes that seek to support claims with concrete data.

When a SaaS company publishes original research about remote work productivity, for instance, including specific metrics rather than general observations increases their LLM citations by 27%.

The most effective statistical content typically includes a mix of data types:

- Original research findings from surveys or studies

- Industry benchmark data

- Performance metrics and comparisons

- Trend analysis with supporting numbers

Expert Quotes and Professional Insights

LLMs heavily favor content that includes expert commentary and professional insights. The inclusion of expert quotes signals credibility to LLMs, particularly when these insights offer unique perspectives or specific scenario analysis.

Industry analyses gain more traction when they include detailed quotes from subject matter experts explaining trends, patterns, and strategic approaches.

Structured Technical Documentation

Technical content with clear structure and hierarchy receives preferential treatment in LLM citations. When a cloud computing platform documents its latest feature release, breaking down the information into clear sections with descriptive headers significantly increases citation rates.

This helps LLMs understand and reference specific portions of technical content accurately.

The documentation should progress logically through implementation steps, specifications, and real-world applications.

Walking through concepts, implementation details, and performance metrics creates multiple citation opportunities for different types of queries.

Time-Sensitive and Current Information

Content that fills temporal gaps in LLM knowledge sees particularly high citation rates. When introducing new technologies or methodologies, thorough documentation about compatibility, use cases, and performance metrics becomes essential.

This current information becomes especially valuable as it often represents the only authoritative source for recent developments.

User-Generated Discussion Threads

Curated user discussions are a key source of LLM citations, but only when they demonstrate genuine value.

Discussions become citation-worthy when they include detailed experiences from multiple sources, specific challenges, and varied solutions.

The most cited discussions share several key characteristics:

- Multiple perspectives on the same issue

- Detailed implementation examples

- Specific problem-solving approaches

- Ongoing engagement and updates

Success stories particularly stand out when they include concrete metrics and specific implementation details rather than vague praise or criticism.

A thread discussing a marketing automation platform’s ROI, for example, gains more traction when users share specific conversion improvements and implementation timelines.

How to Get Your Brand Mentioned in ChatGPT Answers?

Brand visibility in LLM responses depends heavily on establishing strong topical associations and authority signals across the web.

Recent studies show that brands appearing in AI-generated responses typically build their presence through multiple complementary channels.

Digital PR That Influences LLM Training

Digital PR for LLM visibility requires a different approach from traditional PR.

When a sustainable packaging company wants to be recognized as an industry innovator, they need mentions across various authoritative sources that LLMs consider reliable. This creates a network of references that reinforces the brand’s expertise in specific areas.

The most effective digital PR approaches focus on creating genuine news value through:

- Original research findings and industry studies

- Expert commentary on emerging trends

- Technical analysis of sector developments

- Collaborative research with recognized institutions

Scientific publications discussing your research findings become powerful citation sources.

A water filtration company sharing peer-reviewed studies about their new purification technology, for instance, builds stronger topical authority than promotional press releases.

High-Authority Platform Presence

LLMs give special weight to content from established platforms with strong editorial oversight. Consider how a project management tool might build its presence:

They first ensure accurate profiles on primary business platforms. However, simply having profiles isn’t enough—the information must align perfectly across platforms to build LLM confidence in the brand’s identity and offerings.

Next, they actively participate in industry discussions on platforms like Stack Overflow or GitHub, where their technical team provides detailed, helpful answers about project management methodologies.

These contributions, when valuable and well-received by the community, strengthen the brand’s association with specific technical topics.

Wikipedia Strategy and Knowledge Graph Optimization

Wikipedia presence significantly influences LLM responses, but securing and maintaining a page requires careful strategy. A brand must first build notable third-party coverage—journalists, researchers, and industry experts discussing their innovations or impact.

To build credibility for Wikipedia inclusion:

- Get research and findings cited in academic publications

- Secure coverage in major industry publications

- Appear in market research reports

- Document significant industry contributions

Once established, maintaining Wikipedia presence requires ongoing attention to accuracy and neutrality. Changes must be supported by reliable sources and conform to Wikipedia’s strict guidelines about promotional content.

Building Authentic Reddit Presence

Reddit’s influence on LLM training data makes it a crucial platform for brand visibility. The platform’s S-1 filing revealed that many leading LLMs use Reddit content as foundational training data. However, success on Reddit requires genuine community engagement rather than promotional tactics.

Effective Reddit engagement strategies include:

- Publishing detailed technical analysis of industry challenges

- Sharing data-backed insights about market trends

- Contributing expertise to relevant industry discussions

- Conducting transparent AMAs with team experts

The key is maintaining authenticity—users quickly spot and reject promotional content, but embrace genuine expertise sharing.

Does Traditional SEO Help with LLM Rankings?

Recent studies examining 5,000 keywords across various sectors reveal fascinating connections between traditional search rankings and LLM visibility.

While the relationship isn’t one-to-one, strong organic search performance often correlates with increased LLM mentions.

The SEO-LLM Connection

A study from Seer Interactive analyzing 10,000 high-purchase-intent queries found a correlation of approximately 0.65 between organic rankings and LLM brand mentions.

This correlation strengthens when filtering out forums and social media sites, focusing solely on solution-focused content.

Key findings from the study indicate:

- High-ranking content receives 3x more LLM citations

- Solution-focused pages outperform informational content

- Industry-specific websites see stronger correlations
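To see what a correlation like this measures, you can run the same kind of calculation on your own keyword set. The sketch below assumes a plain Pearson correlation, which may not be the method the study used, and the rank and mention numbers are invented for illustration. Because a lower rank number is better, ranks are flipped into a visibility score first so that a positive result means "better rankings, more mentions".

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: organic rank per keyword and the number of sampled
# LLM responses that mentioned the brand for that keyword.
organic_rank = [1, 2, 3, 4, 5, 6, 7, 8]
llm_mentions = [9, 7, 8, 4, 5, 2, 3, 1]

# Flip ranks so that a higher score means a better position.
visibility = [max(organic_rank) + 1 - rank for rank in organic_rank]

r = pearson(visibility, llm_mentions)
print(f"correlation between organic visibility and LLM mentions: {r:.2f}")
```

A value near 1 means the two move together closely, while a value near 0 means rankings tell you little about LLM mentions.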

Technical Considerations and Limitations

An important finding about AI crawler capabilities changes how we approach technical optimization. Unlike search engines, AI crawlers reportedly do not make use of schema markup or structured data, relying instead on the plain HTML content of a page.

This limitation affects several common SEO elements:

- JavaScript-dependent features

- Dynamic rendering

- Complex metadata structures
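One practical check is to confirm that your key messages are present in the raw HTML, before any JavaScript runs. The sketch below uses Python's standard `html.parser` to extract text from a raw HTML string while skipping `<script>` and `<style>` bodies, then reports which phrases are missing. It assumes a crawler that does not execute JavaScript. The page content and the `Acme Planner` product are hypothetical.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collect text present in raw HTML, ignoring <script> and <style> bodies."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def missing_from_raw_html(html, key_phrases):
    """Return the key phrases that do not appear in the raw HTML text."""
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.chunks).lower()
    return [phrase for phrase in key_phrases if phrase.lower() not in text]


# Hypothetical page: the pricing text is only injected by JavaScript.
raw_html = """
<html><body>
  <h1>Acme Planner pricing</h1>
  <div id="plans"></div>
  <script>
    document.getElementById("plans").innerText = "Team plan: $12 per user";
  </script>
</body></html>
"""

print(missing_from_raw_html(raw_html, ["Acme Planner pricing", "Team plan"]))
```

Any phrase this reports as missing exists only after client-side rendering, so it is a candidate for server-side rendering or static HTML.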

Platform-Specific Optimization

Different LLMs pull from different search indexes, creating unique optimization opportunities. ChatGPT relies on Bing search results, while Perplexity and Gemini use Google search data.

This diversity means maintaining strong rankings across multiple search engines becomes increasingly important.

Performance tracking shows:

- Bing-optimized content appears more in ChatGPT

- Google rankings influence Gemini responses

- Fresh content gets priority in all platforms

How to Track Your LLM Performance?

Traditional analytics tools can capture some of the growing influence of LLM traffic, but measuring success requires a multi-faceted approach that goes beyond standard metrics.

Setting Up LLM Traffic Tracking

GA4 now offers capabilities to track referrals from major LLM platforms. The implementation requires specific configuration to capture these new traffic sources.

When properly set up, this tracking reveals not just visit numbers, but also user behavior patterns after arriving through LLM recommendations.

Essential tracking parameters include:

- Direct LLM referrals

- Citation clicks

- Source attribution patterns
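The GA4 configuration itself is not shown here, but the underlying classification logic can be sketched in a few lines and applied to exported referrer data. The hostname list below is an assumption: these are domains the platforms are known to use, but you should verify them against the referrers that actually appear in your own reports. The `classify_llm_referrer` helper is illustrative and not part of any analytics API.

```python
from urllib.parse import urlparse

# Assumed referrer hostnames; verify against your own analytics data.
LLM_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "copilot.microsoft.com": "Copilot",
}


def classify_llm_referrer(referrer_url):
    """Return the LLM platform name for a referrer URL, or None if not recognized."""
    host = (urlparse(referrer_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    for known_host, platform in LLM_REFERRER_HOSTS.items():
        # Match the exact host or any subdomain of it.
        if host == known_host or host.endswith("." + known_host):
            return platform
    return None


# Hypothetical referrer values from an analytics export.
sample_referrers = [
    "https://chatgpt.com/",
    "https://www.perplexity.ai/search?q=project+management+tools",
    "https://www.google.com/",
]
for referrer in sample_referrers:
    print(referrer, "->", classify_llm_referrer(referrer))
```

Matching on the parsed hostname rather than a substring of the full URL avoids false positives from lookalike domains or query strings that merely contain a platform name.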

Brand Mention Analysis

HubSpot’s AI Search Grader provides insights into brand visibility across OpenAI and Perplexity platforms. The tool analyzes two critical aspects:

- Share of voice in your category

- Sentiment of brand mentions

Beyond automated tools, manual sampling remains valuable. Testing key product queries across different LLMs helps identify patterns in how your brand appears in responses.
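If you sample responses manually, a simple share-of-voice figure can be computed from the saved text. The sketch below uses one straightforward definition: each brand's mentions divided by all tracked brand mentions. This is an assumption for illustration and not necessarily how HubSpot's tool calculates it. The brand names and responses are hypothetical.

```python
import re


def share_of_voice(responses, brands):
    """Return each brand's share of all tracked brand mentions in the responses."""
    counts = {brand: 0 for brand in brands}
    for response in responses:
        for brand in brands:
            # Whole-word, case-insensitive match for the brand name.
            pattern = r"\b" + re.escape(brand) + r"\b"
            counts[brand] += len(re.findall(pattern, response, flags=re.IGNORECASE))
    total = sum(counts.values())
    if total == 0:
        return {brand: 0.0 for brand in brands}
    return {brand: counts[brand] / total for brand in brands}


# Hypothetical responses saved from manual sampling.
responses = [
    "Popular options include TaskNest and Plannerly. TaskNest suits small teams.",
    "For enterprise use, Plannerly is often recommended.",
]

for brand, share in share_of_voice(responses, ["TaskNest", "Plannerly", "BoardFlow"]).items():
    print(f"{brand}: {share:.0%}")
```

Run against the same query set over time, this gives a consistent number to compare, even though it says nothing about the sentiment of each mention.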

Performance Metrics That Matter

When analyzing LLM performance, understanding citation context becomes crucial.

Track which sections of your content get cited most frequently and examine whether these citations appear in product recommendations or educational contexts.

Key metrics to monitor include:

- Citation frequency by content type

- Context of brand mentions

- Accuracy of product descriptions

- Competitive positioning

Understanding LLM Analytics

Response patterns reveal how LLMs interpret and present your brand. Examine which queries trigger your brand mentions and analyze the context of these appearances.

For instance, if you’re a project management tool, you might discover your mentions spike in discussions about remote team collaboration but lag in enterprise solution comparisons.

Focus your analysis on:

- Query patterns leading to mentions

- Citation accuracy and context

- Competitive placement

When your product features appear incorrectly in LLM responses, trace these mentions back to their source content.

Often, outdated product descriptions or inconsistent feature naming across different platforms cause these misalignments.

Update your content across all platforms where inaccuracies appear to improve future LLM citations.

Final Words: How to Optimize Content for LLMs

If you’re reading this in 2025, you’ve seen how ChatGPT, Perplexity, and other AI tools are changing how people find information. But here’s what matters: Google still drives most website traffic, and that won’t change overnight.

Start testing LLM optimization alongside your current SEO work, not instead of it. Try one technique at a time, measure what works for your specific situation, and build from there.

The goal isn’t to master every LLM platform, but to show up where your customers are actually looking for answers.

Optimize Your Content for LLMs: FAQ

These are the real questions marketers and website owners ask about getting their content cited by AI tools:

My website never shows up in ChatGPT results - what should I fix first?

Check if your brand appears on the major platforms ChatGPT uses for citations: Wikipedia, Reddit, and reputable industry websites. Pick one platform to focus on first. If you’re in B2B software, for example, start by building a genuine presence on Reddit by having your technical team answer questions in relevant subreddits.

Track whether this increases your mentions in ChatGPT responses over 2-3 months.

Do I need to rewrite all my existing content for LLMs?

No. Start with your most important product pages and high-traffic blog posts. Add clear statistics, expert quotes, and specific examples that LLMs can easily cite.

For example, if you sell running shoes, include actual numbers about weight, materials, and user testing results rather than just saying they’re “lightweight and durable.”

Will paying for PR help get my brand mentioned in AI responses?

PR alone won’t guarantee AI mentions. Instead of general press releases, create original research or unique data that news sites will want to cite. If you’re a marketing tool, you could survey 1,000 customers about their biggest challenges and publish the findings.

This gives journalists and AI systems concrete statistics to reference.

My competitors seem to dominate every AI response - how can I compete?

Look for gaps in their coverage. Use ChatGPT, Perplexity, and similar tools to ask questions about your industry. Note which specific topics, use cases, or customer problems get weak answers.

Create detailed content addressing these gaps, backed by real examples and data. Over time, AI systems will start citing your unique insights.

How do I know if my LLM optimization efforts are working?

Set up referral tracking in GA4 for traffic from AI platforms. Look for trends in which pages get cited most often and what kinds of queries trigger your brand mentions.

Ask the same test questions in ChatGPT weekly and save the responses. This shows you whether your brand appears more frequently or in better contexts over time.
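Once you have a few weeks of saved responses, a small script can turn them into a trend. The sketch below assumes you store each response with the date you collected it, and it reports the fraction of responses per ISO week that mention your brand. The brand name `Plannerly` and the sample data are hypothetical.

```python
from datetime import date


def mention_rate_by_week(saved_responses, brand):
    """Return {(iso_year, iso_week): fraction of responses mentioning the brand}.

    saved_responses is a list of (date, response_text) pairs.
    """
    totals = {}
    hits = {}
    for day, text in saved_responses:
        iso = day.isocalendar()
        key = (iso[0], iso[1])  # (ISO year, ISO week number)
        totals[key] = totals.get(key, 0) + 1
        if brand.lower() in text.lower():
            hits[key] = hits.get(key, 0) + 1
    return {key: hits.get(key, 0) / totals[key] for key in sorted(totals)}


# Hypothetical saved responses to the same weekly test questions.
saved_responses = [
    (date(2025, 1, 6), "Top picks: TaskNest and BoardFlow."),
    (date(2025, 1, 7), "Plannerly is a solid choice for remote teams."),
    (date(2025, 1, 13), "Consider Plannerly or TaskNest."),
]

for week, rate in mention_rate_by_week(saved_responses, "Plannerly").items():
    print(f"{week[0]}-W{week[1]:02d}: {rate:.0%} of responses mention the brand")
```

Keeping the question set fixed from week to week matters more than the exact metric, since changing the questions makes the trend hard to interpret.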